The Criticality of Trusted Execution Environments in Linux-based Embedded Systems

Throughout civilization, trust has been the glue that binds us together. Long before there were computers, there was trust, and without it, relationships decay. Computers don’t change that. In fact, digital trust is every bit as important as personal trust. Digital trust means having confidence in the truth and reliability of data. To provide digital trust, system designers must understand the tools available and the methods for using them. One such tool for security engineers is the Trusted Execution Environment (TEE). Perhaps it is obvious to most by now, but computers, by themselves, are not particularly trustworthy. But can they be? Trusted Execution Environments provide perhaps the key component of secure systems, and as approaches to secure environments have matured, the need for TEEs has become clear. The problem is that there is no single approach to building a TEE, no generic set of requirements for one to address, and no standard method of interaction between applications and the TEE. This creates a fair bit of confusion among system developers about whether they could benefit from a TEE and, if so, how to design systems with such an environment in mind.

Trusted Execution Environments are a big topic. Our goal is to introduce the topic, discuss what TEEs provide to system designers, and explain why you might want to use one. Follow-on blogs will highlight the various architectures and implementations, how TEEs are integrated within Linux, why you want to use the Linux framework, and some approaches to consider when designing for this environment.

This blog will address:

Functions provided by a Trusted Environment:

Private Keystore

Digital Signing and Verification

Generation/Import of Asymmetric Keys

Symmetric Primitives

Access Control

Attestation

Why is it needed and what are some use cases?

Key Management

Trust Verification

Secure Boot

Secure Storage

There are many other blogs and whitepapers that discuss many of these topics. It is not our intent to simply rephrase and republish existing works, but rather to provide a discussion from the perspective of Embedded Linux systems development. We will reference select resources for context and further reading.

There are a lot of concepts wrapped up in the question of what a TEE actually is. To begin, let’s start with the concept of a “Rich Execution Environment” (REE). The typical environment that a user-mode process (i.e., an application) runs within is considered an REE. It has access to many parts of the system: filesystems, network, IPC mechanisms, system calls, and GUIs. There is some level of security: files have permissions or Access Control Lists (ACLs), network communications may be wrapped in TLS, and shared memory and pipes have protection mechanisms built in. Process memory is private, shared only among cooperating applications, and privileged system calls require root (or the corresponding capabilities). Add-on features such as system call filtering and SELinux can further secure the operating environment.

All these protections, though, don’t go far enough, mostly because someone with physical access to the system has ways to defeat them. With physical access, you can snoop on memory or an I/O bus, reboot the system into a maintenance mode, or install or reinstall software in NVRAM.

Furthermore, a malicious user doesn’t even need physical access. They may have access to known or unknown exploits or may be able to co-opt existing hardware and DMA channels. They can hide within seemingly trusted code and intercept system calls, access network traffic while it is in cleartext, or even worse, access secrets such as private keys or passwords in cleartext.

Among the “10 Properties of Secure Embedded Systems,” property #5, Attack Surface Reduction, is a way to minimize attacks, and it points directly to trusted environments. Ultimately, this is one of the most effective ways to ensure security.

A trusted execution environment (TEE) can only run firmware with known signatures. The contents of executable memory are restricted and rooted in hardware, and only validated software can be loaded. Secrets are available only within the trusted environment and are never visible outside it. System buses are either hidden within the silicon or encrypted with secrets known only to the hardware, so they cannot be snooped. A trusted environment is like a black box: it can be observed from the outside, but its contents are visible only from the inside.

Code within a trusted environment cannot be changed without the cooperation of the device developer or the owner of the root of trust. The attack surface of a trusted environment is essentially nil, or it distills down to a single, highly guarded secret. These secrets need to be protected. Methods for protecting these keys are beyond the scope of this blog, but don’t invest in an elaborate security system if you’re going to leave the key under the mat.

What does a Trusted Execution Environment provide?

In the most general sense, a TEE can provide anything you want it to provide. Keep in mind, though, that the interface to the TEE is not trusted: it sits between the TEE and the REE, and since the REE is not trusted, neither is the interface. So, say we want to hide a phone number in the TEE. For the phone number to stay secret, it could never be exposed outside the TEE unless we extend the TEE’s trust to another device by other means.

So, let’s say we have a phone (a “trusted” phone) and we want to place a call to our secret phone number. An API that returns the phone number from the TEE so we can pass it to the trusted phone would not be a trusted solution, because the number would be exposed in the REE. Instead, we could ask the TEE to return the number in an encrypted packet, using a key shared by the TEE and the trusted phone. The API to the trusted environment should account for all the requirements and considerations outlined in the ten properties. In this case, the API would accept an ID that represents the phone number and return an encrypted packet that we pass to our phone. It should be obvious, but the ID sent over the API is untrusted data and must be properly validated before use. Neither the phone number itself nor the key used to encrypt it is ever shared outside the TEE or the trusted phone. That would be a trusted solution.
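The phone-number API can be modeled as a small Python sketch. It is a toy under stated assumptions, not a real TEE interface: `SimulatedTee`, `get_encrypted_number`, `seal`, and `open_sealed` are hypothetical names, and a Python object boundary only stands in for the hardware boundary (Python's underscore attributes are not actually hidden). Because the standard library has no AES-GCM, a SHA-256 counter-mode keystream plus an HMAC-SHA256 tag stands in as a toy authenticated cipher. The sketch shows the two points the example makes: the ID crossing the untrusted interface is validated inside the TEE, and only ciphertext ever reaches the REE.

```python
import hashlib
import hmac
import secrets


def _split_key(key: bytes) -> tuple[bytes, bytes]:
    # Derive independent encryption and MAC keys from one shared key.
    return hashlib.sha256(b"enc" + key).digest(), hashlib.sha256(b"mac" + key).digest()


def _xor_stream(enc_key: bytes, nonce: bytes, data: bytes) -> bytes:
    # Toy SHA-256 counter-mode keystream XORed with data (encrypts or decrypts).
    stream = b"".join(
        hashlib.sha256(enc_key + nonce + i.to_bytes(4, "big")).digest()
        for i in range((len(data) + 31) // 32)
    )
    return bytes(d ^ s for d, s in zip(data, stream))


def seal(key: bytes, plaintext: bytes) -> bytes:
    """Encrypt-then-MAC; returns nonce (16) || ciphertext || tag (32)."""
    enc_key, mac_key = _split_key(key)
    nonce = secrets.token_bytes(16)
    ct = _xor_stream(enc_key, nonce, plaintext)
    return nonce + ct + hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()


def open_sealed(key: bytes, packet: bytes) -> bytes:
    """Check the tag first, then decrypt; raises ValueError on tampering."""
    enc_key, mac_key = _split_key(key)
    if len(packet) < 48:
        raise ValueError("packet too short")
    nonce, ct, tag = packet[:16], packet[16:-32], packet[-32:]
    expected = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("packet failed authentication")
    return _xor_stream(enc_key, nonce, ct)


class SimulatedTee:
    """Hypothetical TEE model: the phone book never leaves this object."""

    def __init__(self, phone_key: bytes):
        self._phone_key = phone_key            # shared only with the trusted phone
        self._numbers = {1: b"+1-555-0100"}    # hypothetical secret phone book

    def get_encrypted_number(self, number_id: object) -> bytes:
        # number_id arrives over the untrusted REE interface: validate it first.
        if type(number_id) is not int:
            raise TypeError("number_id must be an int")
        if number_id not in self._numbers:
            raise KeyError("unknown number_id")
        return seal(self._phone_key, self._numbers[number_id])


# Usage: the REE only ever handles `packet`; decryption happens in the trusted phone.
shared_key = secrets.token_bytes(32)
tee = SimulatedTee(shared_key)
packet = tee.get_encrypted_number(1)
assert open_sealed(shared_key, packet) == b"+1-555-0100"
```

The strict `type(...) is not int` check also rejects `True`, which Python would otherwise treat as the integer 1. Validation belongs on the TEE side because the caller lives in the REE and cannot be trusted to do it.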

Let’s take another example. Say we have a log message that we want to send to a secure log server. The message is received from the Linux journald facility. Although journald is protected by system permissions, it is not a trusted facility, which means the log message itself cannot be considered trusted. We may want to send it to the log server anyway, and it will be sufficient if the server can trust that the message came from us. In other words, the server wants us to prove who we are. We may choose to encrypt or sign the log message; let’s say we sign it using a private key, and the log server has a public key certificate that identifies our system. If we read the private key off our storage device and used it to sign the message, the key would be visible on disk to anyone with physical access to the device. The key is therefore untrusted, and we could not assure the server that the message is coming from us. So, let’s say we encrypt the private key in storage and, when we want to sign a log message, decrypt the key and then sign. That’s still not good enough. It is a little better, because the private key no longer sits in storage in the clear, but since we decrypted it in untrusted memory, exploits may be able to read it, so we can no longer assume the key is trusted. What we can do instead is use an API to the TEE that signs the log message for us. The call into the TEE takes the log message and a private key identifier; the TEE signs the message and passes it back. We then send the signed message to the server, and assuming the server uses a trusted method to validate the signature, it can be assured that the message came from us.

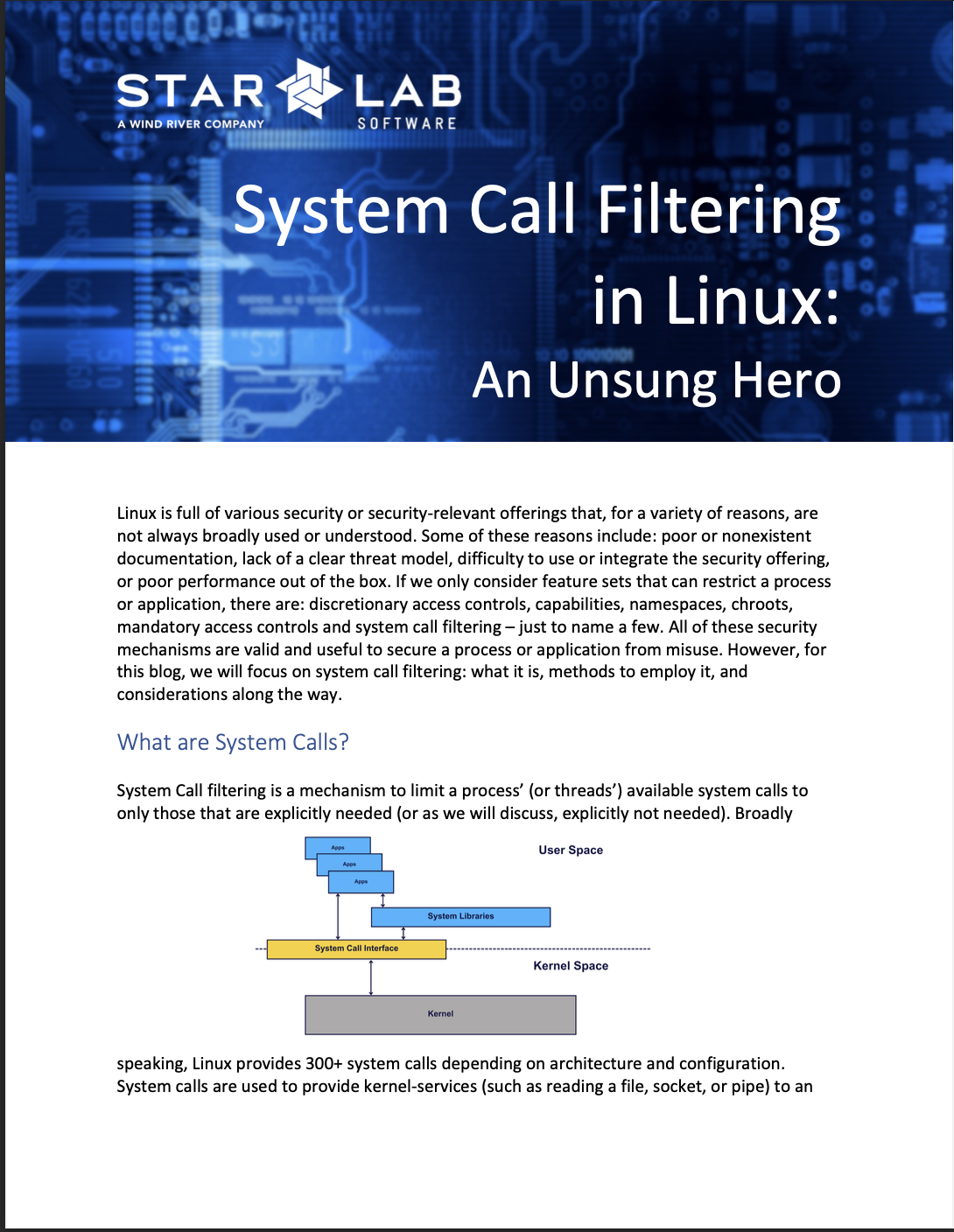

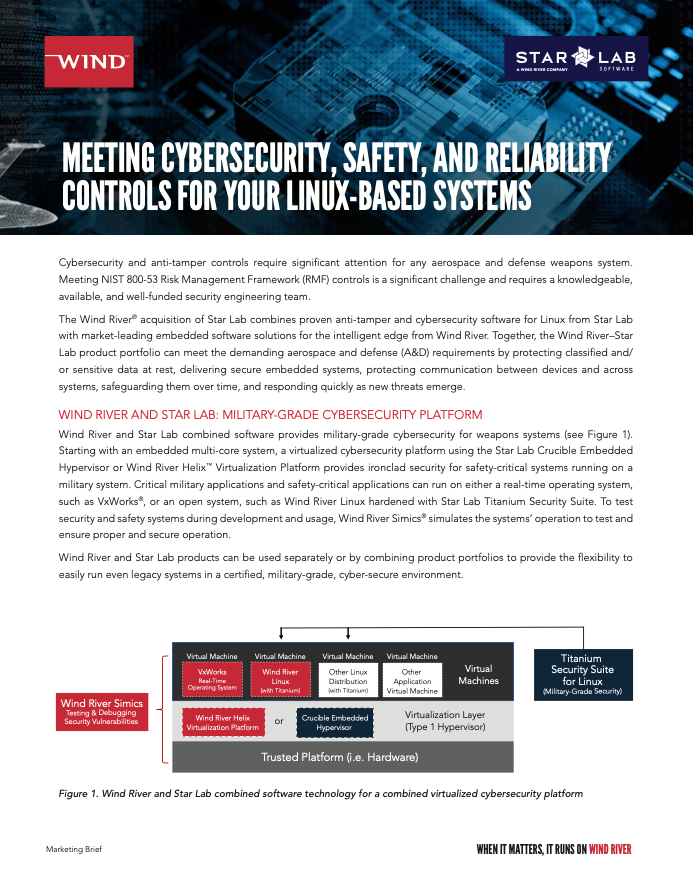

This is depicted in the picture above: an unsigned message (in light green) is passed to the trusted environment, which alone on the system has access to the private key used to sign it. The signed message (in dark green) is passed back to the rsyslog client, which in turn forwards it to the syslog server. Note that the key is never exposed in untrusted space.
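The shape of this signing API, where the caller names a key but never sees it, can be sketched in Python. One loud assumption: the flow described here uses an asymmetric signature checked against a public key certificate, but the Python standard library has no asymmetric signing, so HMAC-SHA256 with a key provisioned to both the device and the server stands in for it. That swap changes the trust model (the server could also produce tags), so treat this as an illustration of the interface only. `SimulatedTeeSigner`, `sign`, `server_verify`, the key ID `device-log-key`, and the 64 KiB size limit are all hypothetical.

```python
import hashlib
import hmac
import secrets


class SimulatedTeeSigner:
    """Hypothetical TEE signing service: callers name a key, never see it."""

    def __init__(self, key_slots: dict[str, bytes]):
        self._key_slots = dict(key_slots)  # would be hardware-protected in a real TEE

    def sign(self, key_id: object, message: object) -> bytes:
        # Both arguments come from the untrusted REE, so check them first.
        if not isinstance(key_id, str) or key_id not in self._key_slots:
            raise KeyError("unknown key identifier")
        if not isinstance(message, bytes) or len(message) > 64 * 1024:  # hypothetical limit
            raise ValueError("message must be bytes of at most 64 KiB")
        # HMAC-SHA256 stands in for an asymmetric signature such as ECDSA.
        return hmac.new(self._key_slots[key_id], message, hashlib.sha256).digest()


def server_verify(server_key: bytes, message: bytes, tag: bytes) -> bool:
    """Log-server side check, using a constant-time comparison."""
    expected = hmac.new(server_key, message, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)


# Usage: the REE passes the journald message and a key ID, and gets back a tag.
provisioned_key = secrets.token_bytes(32)  # hypothetical provisioning step
tee = SimulatedTeeSigner({"device-log-key": provisioned_key})
log_line = b"sshd[812]: Accepted publickey for admin"  # example log text
tag = tee.sign("device-log-key", log_line)
print(server_verify(provisioned_key, log_line, tag))  # True
```

The design choice mirrors the text: the REE holds only a key identifier, so an exploit in the REE can at most request signatures, not steal the key.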

As you can imagine, the uses of a Trusted Execution Environment are nearly unlimited. Essentially, anything you want to keep secret or out-of-band can be handled by a TEE, keeping in mind that whatever you want to keep secret can only be exposed within the TEE or transferred in a trusted manner to another trusted device. This trusted transfer to a trusted device is itself another use of a TEE, referred to as Secure Peripheral Access.

For another example, let’s say we have a picture of a “super top secret” time machine. Assume it was taken by a “super top secret” camera and encrypted with a “super top secret” key that only the camera and our TEE know. Because the image is encrypted with a trusted key, it can be uploaded to storage on our system, where it exists as a file. The file itself is not trusted, but its contents are protected by virtue of being encrypted. How can we view this image in a trusted manner?

Let’s say our TEE supports Secure Peripheral Access to an image viewer. The image viewer is a trusted device with a trusted interconnect between our TEE and the actual pixels in the viewer. Consider the trusted interconnect to be encrypted Thunderbolt or similar, with the TEE and the image viewer sharing the encryption keys. The TEE could provide an API that displays the “super top secret” image of the super top secret time machine: the call would take the path to the encrypted image and display it on the image viewer. The TEE would decrypt the image, re-encrypt it with the Thunderbolt link keys, and send it to the trusted viewer. Except for sneaky people looking over your shoulder, the actual image is never visible outside the TEE.
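The TEE’s role in this flow is key translation: it decrypts with one key and re-encrypts with another, so the plaintext exists only inside the TEE. The minimal Python sketch below assumes a hypothetical `SimulatedViewerTee` with a `display_image` method, uses an object boundary as a stand-in for the hardware boundary, and substitutes a toy SHA-256 keystream with an HMAC tag for a real authenticated cipher such as AES-GCM on both the camera and the link side. The key names and placeholder image bytes are illustrative only.

```python
import hashlib
import hmac
import os
import secrets
import tempfile


def _split_key(key: bytes) -> tuple[bytes, bytes]:
    # Derive independent encryption and MAC keys from one shared key.
    return hashlib.sha256(b"enc" + key).digest(), hashlib.sha256(b"mac" + key).digest()


def _xor_stream(enc_key: bytes, nonce: bytes, data: bytes) -> bytes:
    # Toy SHA-256 counter-mode keystream XORed with data (encrypts or decrypts).
    stream = b"".join(
        hashlib.sha256(enc_key + nonce + i.to_bytes(4, "big")).digest()
        for i in range((len(data) + 31) // 32)
    )
    return bytes(d ^ s for d, s in zip(data, stream))


def seal(key: bytes, plaintext: bytes) -> bytes:
    """Encrypt-then-MAC; returns nonce (16) || ciphertext || tag (32)."""
    enc_key, mac_key = _split_key(key)
    nonce = secrets.token_bytes(16)
    ct = _xor_stream(enc_key, nonce, plaintext)
    return nonce + ct + hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()


def open_sealed(key: bytes, packet: bytes) -> bytes:
    """Check the tag first, then decrypt; raises ValueError on tampering."""
    enc_key, mac_key = _split_key(key)
    if len(packet) < 48:
        raise ValueError("packet too short")
    nonce, ct, tag = packet[:16], packet[16:-32], packet[-32:]
    if not hmac.compare_digest(tag, hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()):
        raise ValueError("packet failed authentication")
    return _xor_stream(enc_key, nonce, ct)


class SimulatedViewerTee:
    """Hypothetical TEE holding both the camera key and the viewer link key."""

    def __init__(self, camera_key: bytes, link_key: bytes):
        self._camera_key = camera_key  # shared only with the trusted camera
        self._link_key = link_key      # shared only with the trusted viewer

    def display_image(self, path: str) -> bytes:
        # The path and file are untrusted. A real TEE usually cannot open REE
        # files itself; the REE would relay the bytes. Only an authenticated
        # ciphertext is accepted, so an edited or swapped file is rejected.
        with open(path, "rb") as f:
            sealed = f.read()
        image = open_sealed(self._camera_key, sealed)  # plaintext exists only here
        return seal(self._link_key, image)             # what goes over the link


camera_key = secrets.token_bytes(32)  # known only to the camera and the TEE
link_key = secrets.token_bytes(32)    # known only to the TEE and the viewer
photo = b"<raw pixels of the time machine>"  # placeholder image data

# The camera uploads only ciphertext; the file on REE storage is untrusted.
with tempfile.NamedTemporaryFile(suffix=".enc", delete=False) as f:
    f.write(seal(camera_key, photo))
    path = f.name

tee = SimulatedViewerTee(camera_key, link_key)
frame = tee.display_image(path)  # ciphertext bound for the viewer link
os.remove(path)
assert open_sealed(link_key, frame) == photo  # only the viewer can do this
```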

One of the most prevalent uses of a trusted execution environment is attestation. Attestation is a fancy word for establishing the validity of a resource; we used it above when signing our log messages. It is increasingly important for embedded devices to ensure that the software they are running is the software they are expected to run. This matters for a multitude of reasons. Take Android or iOS smartphones: carriers don’t want to let an “untrusted” device onto the cellular network. This assurance can go beyond the device itself: the device communicates certain secure assets, such as hardware or software configurations, to the carrier, which uses them to determine the integrity of the device. This is called remote attestation, and it requires that the assets be communicated in a trusted manner.
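Remote attestation is commonly a challenge-response exchange: the verifier sends a fresh nonce, and the device’s TEE returns its measurement bound to that nonce with a key only the TEE holds. The Python sketch below assumes a hypothetical `SimulatedAttestingTee`, treats a SHA-256 digest of the firmware as the “measurement,” and uses HMAC-SHA256 with a provisioned key as a stand-in for the asymmetric attestation signature real TEEs typically use. The nonce is what stops an old, good answer from being replayed by a device that has since been compromised.

```python
import hashlib
import hmac
import secrets


class SimulatedAttestingTee:
    """Hypothetical device-side TEE that measures firmware and answers challenges."""

    def __init__(self, attestation_key: bytes, firmware_image: bytes):
        self._key = attestation_key
        # Measurement taken at boot; the REE cannot alter it afterwards.
        self._measurement = hashlib.sha256(firmware_image).digest()

    def quote(self, nonce: bytes) -> tuple[bytes, bytes]:
        # The nonce comes from outside, so validate it before use.
        if not isinstance(nonce, bytes) or len(nonce) != 32:
            raise ValueError("nonce must be 32 bytes")
        # HMAC-SHA256 stands in for an asymmetric attestation signature.
        tag = hmac.new(self._key, nonce + self._measurement, hashlib.sha256).digest()
        return self._measurement, tag


def verify_quote(key: bytes, nonce: bytes, golden: bytes,
                 measurement: bytes, tag: bytes) -> bool:
    """Verifier side: the tag must bind this nonce, and the measurement must match."""
    expected_tag = hmac.new(key, nonce + measurement, hashlib.sha256).digest()
    tag_ok = hmac.compare_digest(expected_tag, tag)
    return tag_ok and hmac.compare_digest(measurement, golden)


# Usage with hypothetical firmware images and a provisioned key.
key = secrets.token_bytes(32)
golden = hashlib.sha256(b"firmware v1.2.3").digest()
nonce = secrets.token_bytes(32)  # fresh for every challenge
good = SimulatedAttestingTee(key, b"firmware v1.2.3")
bad = SimulatedAttestingTee(key, b"firmware v1.2.3 + rootkit")
print(verify_quote(key, nonce, golden, *good.quote(nonce)))  # True
print(verify_quote(key, nonce, golden, *bad.quote(nonce)))   # False
```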

Cell phone users don’t want malware snooping on passwords or stealing private content. How can a phone ensure that it is running known, validated software? This becomes a larger topic, sometimes called “secure boot,” which can involve a “chain of trust”: something in your phone establishes a “root of trust,” and then a series of stages validates the software, each establishing a trust “link” in the chain. Application content can be trusted if it is validated by a trust link that has itself been validated by a trust link, all the way up to the root of trust, or trust anchor. A TEE assists in establishing the validity of each link in the chain and in passing that trust to the next link.

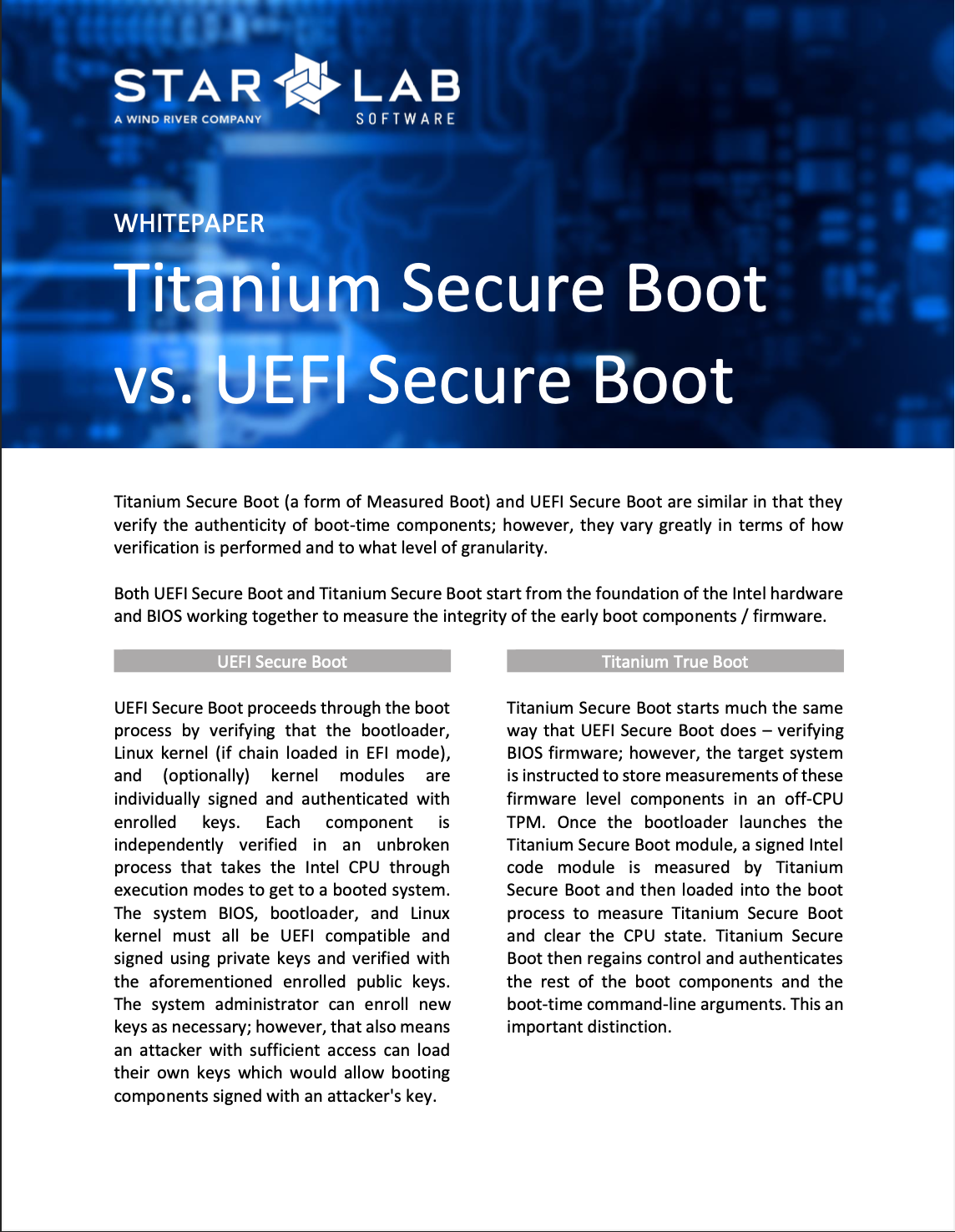

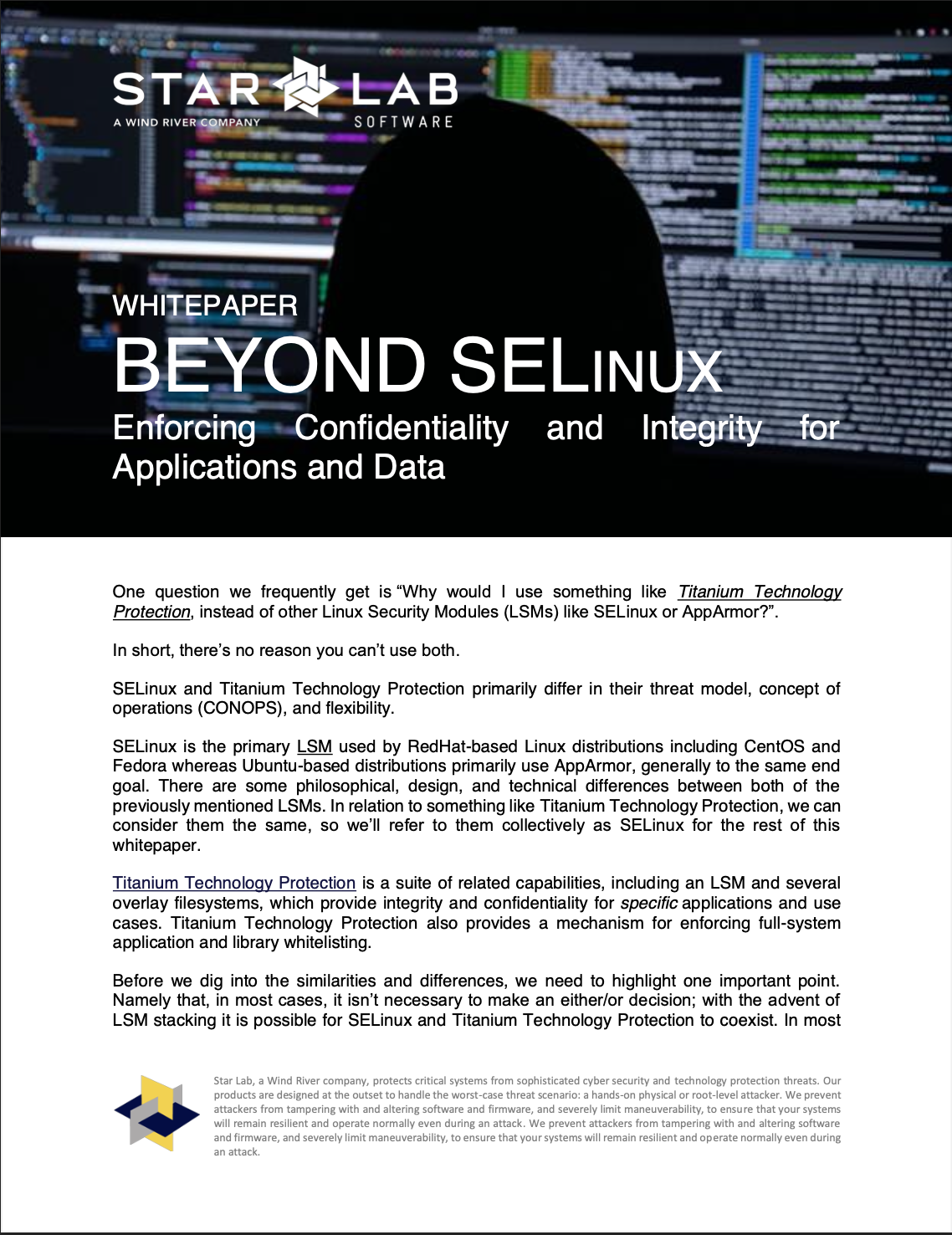

Hardware and software architectures can implement this chain of trust in different ways, and an entire blog could be written on secure boot. The diagram above is meant to show a basic flow for establishing a chain of trust.
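A chain of trust can be illustrated with a short Python sketch in which each stage carries the expected digest of the stage after it, and an immutable anchor (for example, a value fused into ROM or one-time-programmable memory) holds the digest of the first stage. This is a simplification under stated assumptions: real boot chains usually verify public-key signatures rather than embedded digests, which lets a stage be updated without rebuilding its predecessor. `Stage`, `build_chain`, and `verify_chain` are hypothetical names.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Optional


@dataclass
class Stage:
    name: str
    code: bytes
    next_digest: Optional[bytes]  # digest this stage expects of its successor


def stage_digest(stage: Stage) -> bytes:
    # The digest covers the code *and* the embedded successor digest,
    # so neither can be altered without breaking the previous link.
    return hashlib.sha256(stage.code + (stage.next_digest or b"")).digest()


def build_chain(images: list[tuple[str, bytes]]) -> tuple[bytes, list[Stage]]:
    """Build stages back to front; returns (root-of-trust digest, stages)."""
    stages: list[Stage] = []
    next_digest: Optional[bytes] = None
    for name, code in reversed(images):
        stage = Stage(name, code, next_digest)
        next_digest = stage_digest(stage)
        stages.insert(0, stage)
    if next_digest is None:
        raise ValueError("chain must have at least one stage")
    return next_digest, stages


def verify_chain(root_digest: bytes, stages: list[Stage]) -> list[str]:
    """Walk the chain from the anchor; stop at the first link that fails."""
    expected: Optional[bytes] = root_digest
    booted = []
    for stage in stages:
        if expected is None or not hmac.compare_digest(stage_digest(stage), expected):
            raise RuntimeError(f"verification failed at {stage.name}")
        booted.append(stage.name)  # only now would control pass to this stage
        expected = stage.next_digest
    return booted


root, chain = build_chain([("bootloader", b"BL code"),
                           ("kernel", b"kernel code"),
                           ("application", b"app code")])
print(verify_chain(root, chain))  # ['bootloader', 'kernel', 'application']

chain[1].code = b"kernel code with implant"  # tamper with one link
try:
    verify_chain(root, chain)
except RuntimeError as err:
    print(err)  # verification failed at kernel
```

Tampering with the kernel does not affect the bootloader, whose digest still matches the anchor, but the bootloader's embedded expectation for the kernel no longer matches, so the chain stops there.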

Another real-world use case for Trusted Execution Environments, one that I bet you carry every day without knowing it, is the credit card with an embedded security chip. That chip is a trusted execution environment! Its contents cannot be read out, nor can they be changed. Besides algorithms that help verify transactions, it contains a secret unique to the card. When you type your PIN into the POS terminal, it is presented to the chip along with a unique, unpredictable value (a nonce) for that transaction. What comes out of the smart card is a unique sequence of digits that is infeasible to forge, tied to that card, that PIN, and that transaction. When it is passed back to the financial institution, the institution can trust that that card and that PIN were used at that terminal at that time. Unfortunately, it cannot guarantee that someone else doesn’t have your card and know your PIN. Cue up biometrics. What it does prevent, though, is someone who knows your PIN but doesn’t have your card, or someone who has your card but doesn’t know your PIN, from using the information. Ingenious.
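The property described here, a value that only the card can produce and only after the correct PIN, can be illustrated with a toy Python model. This is explicitly not the EMV algorithm: real cards derive per-transaction session keys and use standardized cryptogram formats. In this hypothetical `SimulatedCard`, the chip checks the PIN itself (with a try counter), and only then computes a truncated HMAC-SHA256 over the nonce and amount using a key shared with the issuer; `issuer_check` is the issuer’s side. The PIN, key sizes, and amounts are illustrative.

```python
import hashlib
import hmac
import secrets

MAX_TRIES = 3


def _cryptogram(card_key: bytes, nonce: bytes, amount_cents: int) -> bytes:
    # Toy cryptogram: HMAC-SHA256 over the transaction data, truncated to 8 bytes.
    data = nonce + amount_cents.to_bytes(8, "big")
    return hmac.new(card_key, data, hashlib.sha256).digest()[:8]


class SimulatedCard:
    """Hypothetical chip: holds a secret key and checks the PIN internally."""

    def __init__(self, card_key: bytes, pin: str):
        self._key = card_key
        self._pin = pin.encode()
        self._tries_left = MAX_TRIES

    def authorize(self, pin: str, nonce: bytes, amount_cents: int) -> bytes:
        if self._tries_left == 0:
            raise PermissionError("card blocked")
        if not hmac.compare_digest(pin.encode(), self._pin):
            self._tries_left -= 1
            raise PermissionError("wrong PIN")
        self._tries_left = MAX_TRIES
        return _cryptogram(self._key, nonce, amount_cents)


def issuer_check(card_key: bytes, nonce: bytes, amount_cents: int,
                 cryptogram: bytes) -> bool:
    """Issuer recomputes the value with its copy of the card key."""
    return hmac.compare_digest(_cryptogram(card_key, nonce, amount_cents), cryptogram)


card_key = secrets.token_bytes(32)  # shared by card and issuer only
card = SimulatedCard(card_key, "4921")
nonce = secrets.token_bytes(8)      # fresh for every transaction
cryptogram = card.authorize("4921", nonce, 2599)
print(issuer_check(card_key, nonce, 2599, cryptogram))  # True
print(issuer_check(card_key, nonce, 9999, cryptogram))  # False: amount changed
```

Without the card, nobody can compute the value; without the PIN, the card refuses to; and because the nonce changes every time, a captured value is useless for the next transaction.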

Do you need a Trusted Execution Environment?

Increasingly, the answer is yes, in almost all cases. That’s a somewhat bold statement, but consider the most mundane application, for example a simple calculator. It’s not so much that the application is secret, but no one knows what that calculator will be called on to do. One example is the “salami slicing” hack, where a financial application rounded all fractions of a cent down to the nearest cent and deposited the remainder in a separate account, where it could accumulate. Someone using the calculator may not even know that the results have been tampered with. Physical calculators are considered trusted because they are hardware devices with their firmware hardwired in. Software-based calculators cannot be considered secure unless they are validated.
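The salami-slicing arithmetic is easy to see with Python’s `decimal` module. In this sketch the balances and rate are made up, `honest_interest` rounds to the nearest cent (the half-even rule is an assumption; institutions choose their own rounding policy), and the hypothetical `sliced_interest` always rounds down and keeps the fraction that was cut off.

```python
from decimal import Decimal, ROUND_DOWN, ROUND_HALF_EVEN

CENT = Decimal("0.01")


def honest_interest(balance: Decimal, rate: Decimal) -> Decimal:
    """Round to the nearest cent (half-even)."""
    return (balance * rate).quantize(CENT, rounding=ROUND_HALF_EVEN)


def sliced_interest(balance: Decimal, rate: Decimal) -> tuple[Decimal, Decimal]:
    """Tampered version: always round down and keep the fraction cut off."""
    exact = balance * rate
    credited = exact.quantize(CENT, rounding=ROUND_DOWN)
    return credited, exact - credited


balances = [Decimal("1234.56"), Decimal("987.65"), Decimal("50.01")]
rate = Decimal("0.0125")

skim_account = Decimal("0")
for balance in balances:
    credited, skimmed = sliced_interest(balance, rate)
    skim_account += skimmed
    print(balance, honest_interest(balance, rate), credited)

print(skim_account)  # 0.012750
```

Each slice is under a cent, so no single customer is likely to notice, yet the slices add up across many accounts and many runs. The books still balance, because credited interest plus the skim equals the exact total, which is why the tampering is hard to spot from the results alone.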

Businesses and individuals are constantly bombarded with attempts to steal data, harvest customer information, reveal passwords, or expose financial data. Each of us controls a treasure trove of digital assets that malicious actors would love to get hold of. We depend on trust, because we can’t determine for ourselves whether a particular app or device can be trusted. Not only do we lack the information to make that determination, but most of us wouldn’t know how to decide even if we were given all of it. We rely on device manufacturers to provide a trusted environment for their devices, and our trust depends on it.

The world of IoT and embedded devices needs to be especially concerned with creating trusted assets. These devices are purpose-built, with specific functions that must be secure and free from tampering. Without a TEE, you can get close to a trusted environment, but you cannot fully achieve one. Ultimately, a Trusted Execution Environment is a requirement for all embedded applications.

With the maturity of hardware architectures and the integration of software frameworks with embedded OSes (such as OP-TEE for Linux), there are few obstacles to making the most of trusted execution environments in your next development project. See the following references for further perspectives on Trusted Environments, and see other blogs from Star Lab for ways to deploy defense in depth in your products.

Further Reading

How abuse of identity services threatens the US’ supply chain

How design flaws in some TEEs can still lead to vulnerabilities

To learn more about how Star Lab’s technologies can secure your embedded system, contact us.