An Introduction to Dm-verity in Embedded Device Security

Most security mechanisms in Linux focus on protecting users while the system is powered on. This makes sense, since most computing happens while the computer is on, but there is an entire class of attacks that can occur while the system is off. Imagine an attacker removes the hard drive, modifies it, and puts it back. How can we detect such an attack and defend the operating system’s code against it? The answer is to use some form of file system integrity scheme. (The more general problem of preventing an attacker from changing user data or extracting secrets is a discussion for another time.)

There are several approaches to file system integrity available in Linux, each with its own capabilities and constraints. There is no single best solution for all types of systems. Today, we will focus on one in particular: dm-verity.

About dm-verity

Dm-verity was introduced in Linux kernel version 3.4. Perhaps surprisingly, it is a widely deployed technology: Android has used it to protect its system partition since Android 4.4 (released in late 2013), so it is used daily on billions of embedded devices worldwide. Dm-verity provides disk integrity with minimal overhead and is transparent to applications, which don’t even need to know that dm-verity is in use.

We’ll look at what dm-verity costs in more detail later, but in short, the cost is small: it adds very little runtime overhead and needs very little extra space. As a concrete example, the hash data for the 10 GiB image used later in this post takes only about 81 MiB, less than 1% of the disk. Although it is beyond the scope of this post, dm-verity can also include forward error correction, allowing it not only to detect corruption but also to transparently correct it.

The one caveat to mention up front is that in all cases, dm-verity is expected to be paired with some sort of secure boot scheme. If an attacker can control the boot process, then they can easily subvert dm-verity. So in everything that follows, we assume we have working secure boot on whatever device we use, which is, we realize, “draw the rest of the owl” advice.

How dm-verity works

The concept behind dm-verity is actually quite simple. Divide the disk into blocks and, for each block, record the hash of the data stored within. When it’s time to read data from disk, verify each block read against the hash that was calculated earlier. Hashing a block is fast, and the hashes don’t take up much extra space. Things get interesting when we ask two questions: where are the hashes stored while the computer is turned off, and how do we know the hashes haven’t been tampered with when we load them back in?
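A minimal Python sketch of this flat scheme, assuming 4096-byte blocks and SHA-256 (the defaults that appear later in this post) and ignoring the salt that veritysetup mixes into each hash. The function names are hypothetical, and the error message only mimics the kernel log wording seen later. Note that it leaves open exactly the question raised above: nothing protects the `hashes` list itself.

```python
import hashlib

BLOCK_SIZE = 4096  # dm-verity's default data block size


def hash_blocks(image: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Record the SHA-256 digest of every block (done once, ahead of time)."""
    if len(image) % block_size:
        raise ValueError("image size must be a multiple of the block size")
    return [hashlib.sha256(image[off:off + block_size]).digest()
            for off in range(0, len(image), block_size)]


def read_block(image: bytes, index: int, hashes: list[bytes],
               block_size: int = BLOCK_SIZE) -> bytes:
    """Read one block and check it against its recorded digest before returning it."""
    block = image[index * block_size:(index + 1) * block_size]
    if hashlib.sha256(block).digest() != hashes[index]:
        raise OSError(f"data block {index} is corrupted")
    return block


if __name__ == "__main__":
    disk = bytearray(4 * BLOCK_SIZE)        # a tiny four-block "disk"
    disk[0:5] = b"hello"
    hashes = hash_blocks(bytes(disk))
    print(read_block(bytes(disk), 0, hashes)[:5])   # b'hello'
    disk[BLOCK_SIZE] ^= 0x01                # flip a single bit in block 1
    try:
        read_block(bytes(disk), 1, hashes)
    except OSError as err:
        print(err)                          # data block 1 is corrupted
```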

To make things easier, when dm-verity is protecting a disk, the kernel prevents anyone from writing to that device. In other words, dm-verity disks are always read-only, which is one of the features that distinguishes dm-verity from other file system integrity schemes. This gives dm-verity online integrity as well: while there’s nothing terribly exciting about making a disk read-only, it provides an additional level of assurance that an attacker cannot modify the system either online or at rest.

Now on to the fun part: how are the hashes themselves protected? Well, we treat our table of hashes like a disk and protect it with dm-verity! Put another way, we group our hashes into blocks (128 hashes per block with the defaults, since a 4096-byte block holds 128 32-byte SHA-256 hashes) and record the hash of each block. This produces a table of hashes like the one we had before, only smaller. We repeat this process, producing ever-smaller tables, until we have only a single hash to record: the root hash.

This construction is known as a Merkle tree. No data on disk or hash in the tree can be altered without also altering the root hash, so if we know what the root hash should be, we can verify that everything is as it should be. The root hash needs to be protected from modification, but it doesn’t need to be a secret.

The Merkle tree construction lets us verify only the hashes we need, as we need them, rather than verifying everything all at once. Especially on an embedded system, the all-at-once method could cause a noticeable delay on startup. It also provides flexibility in that corruption in one part of the disk doesn’t affect other parts of the disk (though we can treat it that way if we wish).
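Putting the last three paragraphs together, here is a minimal in-memory Python sketch. `build_tree` hashes the data blocks, packs 128 digests into each 4096-byte hash block, and repeats until one block remains, whose digest is the root hash; `verify_block` then checks a single data block by walking only the hash blocks on its path to the root. Both functions are hypothetical illustrations, not veritysetup’s implementation: they omit the salt and dm-verity’s on-disk layout, so their root hash will not match the one veritysetup prints.

```python
import hashlib

BLOCK_SIZE = 4096
DIGEST_SIZE = 32                                # SHA-256
HASHES_PER_BLOCK = BLOCK_SIZE // DIGEST_SIZE    # 128 with the defaults


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_tree(image: bytes) -> tuple[list[list[bytes]], bytes]:
    """Return (levels, root_hash) for an image made of whole blocks.

    levels[0] holds the hash blocks that store the data-block digests; each
    higher level stores the digests of the hash blocks one level below, until
    a single hash block remains. The root hash is the digest of that block.
    """
    if not image or len(image) % BLOCK_SIZE:
        raise ValueError("image must be a non-empty multiple of BLOCK_SIZE")
    digests = [sha256(image[o:o + BLOCK_SIZE]) for o in range(0, len(image), BLOCK_SIZE)]
    levels = []
    while True:
        # Pack up to HASHES_PER_BLOCK digests into each zero-padded hash block.
        blocks = [b"".join(digests[i:i + HASHES_PER_BLOCK]).ljust(BLOCK_SIZE, b"\0")
                  for i in range(0, len(digests), HASHES_PER_BLOCK)]
        levels.append(blocks)
        digests = [sha256(b) for b in blocks]
        if len(blocks) == 1:
            return levels, digests[0]


def verify_block(image: bytes, index: int, levels: list[list[bytes]], root: bytes) -> bytes:
    """Check one data block using only the hash blocks on its path to the root."""
    data = image[index * BLOCK_SIZE:(index + 1) * BLOCK_SIZE]
    digest, pos = sha256(data), index
    for level in levels:
        block_no, slot = divmod(pos, HASHES_PER_BLOCK)
        hash_block = level[block_no]
        if hash_block[slot * DIGEST_SIZE:(slot + 1) * DIGEST_SIZE] != digest:
            raise OSError(f"verification failed for data block {index}")
        digest, pos = sha256(hash_block), block_no   # now vouch for this hash block
    if digest != root:
        raise OSError(f"verification failed for data block {index}")
    return data


if __name__ == "__main__":
    disk = bytearray(300 * BLOCK_SIZE)              # 300 data blocks -> 2 hash levels
    disk[5 * BLOCK_SIZE:5 * BLOCK_SIZE + 5] = b"hello"
    levels, root = build_tree(bytes(disk))
    print([len(level) for level in levels])         # [3, 1]
    disk[200 * BLOCK_SIZE] ^= 0x01                  # corrupt data block 200
    print(verify_block(bytes(disk), 5, levels, root)[:5])   # b'hello'
```

Corrupting block 200 in the demo leaves block 5 readable, which is the “corruption in one part of the disk doesn’t affect other parts” property described above; calling `verify_block` on block 200 itself would raise an error.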


A simple example

To walk through a tangible example, let’s create a dm-verity protected disk. We’ll need a data partition to protect, of course, but also somewhere to store the hashes. We have two options for this: store them in separate data and hash partitions, or store the hashes in unformatted space at the end of the data partition. For simplicity, we’ll do the former here. Note that the root hash itself is not stored anywhere on disk: we must provide that value to the kernel ourselves.

First, create a stand-in data partition and fill it with a few files:

```
root# truncate -s 10G data_partition.img
root# mkfs -t ext4 data_partition.img
root# mkdir mnt
root# mount -o loop data_partition.img mnt/
root# echo "hello" > mnt/one.txt
root# echo "integrity" > mnt/two.txt
root# umount mnt/
```

Next, we’ll create a stand-in partition to hold the hash data:

```
root# truncate -s 100M hash_partition.img
```

Then we hash the data and store the result in our hash partition. Below, we use the --debug flag to show details such as the number of hash tree levels and the disk space ultimately required:

```
root# veritysetup -v --debug format data_partition.img hash_partition.img
# cryptsetup 2.2.2 processing "veritysetup -v --debug format data_partition.img hash_partition.img"
# Running command format.
# Allocating context for crypt device hash_partition.img.
# Trying to open and read device hash_partition.img with direct-io.
# Initialising device-mapper backend library.
# Formatting device hash_partition.img as type VERITY.
# Crypto backend (OpenSSL 1.1.1f 31 Mar 2020) initialized in cryptsetup library version 2.2.2.
# Detected kernel Linux 5.13.0-52-generic x86_64.
# Setting ciphertext data device to data_partition.img.
# Trying to open and read device data_partition.img with direct-io.
# Hash creation sha256, data device data_partition.img, data blocks 2621440, hash_device hash_partition.img, offset 1.
# Using 4 hash levels.
# Data device size required: 10737418240 bytes.
# Hash device size required: 84557824 bytes.
# Updating VERITY header of size 512 on device hash_partition.img, offset 0.
VERITY header information for hash_partition.img
UUID: 4da1ecb5-5111-4922-8747-5e867036d9de
Hash type: 1
Data blocks: 2621440
Data block size: 4096
Hash block size: 4096
Hash algorithm: sha256
Salt: f2790cf141405152cf61b6eb176128ad0676b41524dd32ac39760d3be2d495cf
Root hash: a2a8fd07889deb10b4cdf53c01637ed373212cd7d0877a8aa9ae6fd4240f0f71
# Releasing crypt device hash_partition.img context.
# Releasing device-mapper backend.
# Closing read write fd for hash_partition.img.
Command successful.
```

Copy the root hash; we will need it later. At this point, even a single-bit change to data_partition.img will cause verification errors, so don’t touch it! The data_partition.img file contains a regular ext4 file system, and hash_partition.img contains the 512-byte VERITY header reported above followed by the entire Merkle tree we discussed before, minus the root hash. See the cryptsetup wiki for a more detailed description of the format and algorithms used in the hash partition.
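As a cross-check of the numbers in the debug output, this small Python sketch recomputes the level count and hash device size from the number of data blocks. It assumes the default layout visible in the output (4096-byte blocks and 32-byte SHA-256 digests, so 128 digests per hash block) and assumes that “offset 1” means one block at the start of the hash device is reserved for the VERITY header; the function name is hypothetical. Under those assumptions the levels hold 20480, 160, 2 and 1 hash blocks, which agrees with “Using 4 hash levels” and the 84557824-byte figure.

```python
def verity_hash_layout(data_blocks: int, block_size: int = 4096,
                       digest_size: int = 32) -> tuple[int, int]:
    """Return (hash_levels, hash_device_bytes) for a dm-verity hash tree.

    Assumptions: hash blocks are the same size as data blocks, each holds
    block_size // digest_size digests, and one extra block at the start of
    the hash device is reserved for the VERITY header ("offset 1" above).
    """
    per_block = block_size // digest_size       # 128 for 4096-byte blocks and SHA-256
    levels = hash_blocks = 0
    count = data_blocks
    while count > 1:
        count = -(-count // per_block)          # ceiling division: blocks at this level
        levels += 1
        hash_blocks += count
    return levels, (hash_blocks + 1) * block_size


if __name__ == "__main__":
    data_blocks = 10 * 2**30 // 4096            # the 10 GiB image: 2621440 blocks
    levels, size = verity_hash_layout(data_blocks)
    print(levels, size, f"{size / 2**20:.1f} MiB")   # 4 84557824 80.6 MiB
```

This is also where the roughly 81 MB overhead figure quoted at the start of this post comes from.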

Now we can activate the dm-verity device and mount the data partition. We need to choose a device-mapper name, which can be anything; this example uses “verity-test”, as shown in the command below.

```
root# veritysetup open \
> data_partition.img \
> verity-test \
> hash_partition.img \
> a2a8fd07889deb10b4cdf53c01637ed373212cd7d0877a8aa9ae6fd4240f0f71
```

This command, and in particular the root hash it hands to the kernel, is what needs to be protected by secure boot.

At this point you should see /dev/mapper/verity-test appear in the file system. All we need to do is mount it like a regular disk, and we have a protected file system!

```
root# mount /dev/mapper/verity-test mnt/
root# cat mnt/one.txt mnt/two.txt
hello
integrity
```
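Under the hood, `veritysetup open` gives the kernel a device-mapper table line for the verity target. The Python sketch below assembles such a line from the values in the format output above, following the parameter order in the kernel’s dm-verity documentation (version, data device, hash device, data and hash block sizes, number of data blocks, hash start block, algorithm, root hash, salt). The loop device names are hypothetical stand-ins for whatever loop devices veritysetup attaches, and the helper itself is illustrative. Whatever mechanism delivers this line at boot, its root hash is exactly what secure boot has to vouch for.

```python
def verity_table(data_dev: str, hash_dev: str, data_blocks: int,
                 root_hash: str, salt: str, block_size: int = 4096,
                 hash_start_block: int = 1, algorithm: str = "sha256") -> str:
    """Format a device-mapper table line for a version 1 dm-verity target."""
    sectors = data_blocks * block_size // 512   # target length in 512-byte sectors
    params = [
        1,                  # hash format version (matches "Hash type: 1")
        data_dev, hash_dev,
        block_size,         # data block size
        block_size,         # hash block size
        data_blocks,
        hash_start_block,   # hash tree starts after the 1-block VERITY header
        algorithm, root_hash, salt,
    ]
    return f"0 {sectors} verity " + " ".join(str(p) for p in params)


if __name__ == "__main__":
    print(verity_table(
        "/dev/loop0", "/dev/loop1",   # hypothetical loop devices
        2621440,
        "a2a8fd07889deb10b4cdf53c01637ed373212cd7d0877a8aa9ae6fd4240f0f71",
        "f2790cf141405152cf61b6eb176128ad0676b41524dd32ac39760d3be2d495cf",
    ))
```

The kernel documentation shows a line of this shape being passed to `dmsetup create <name> --readonly --table`.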


How exactly did it work?

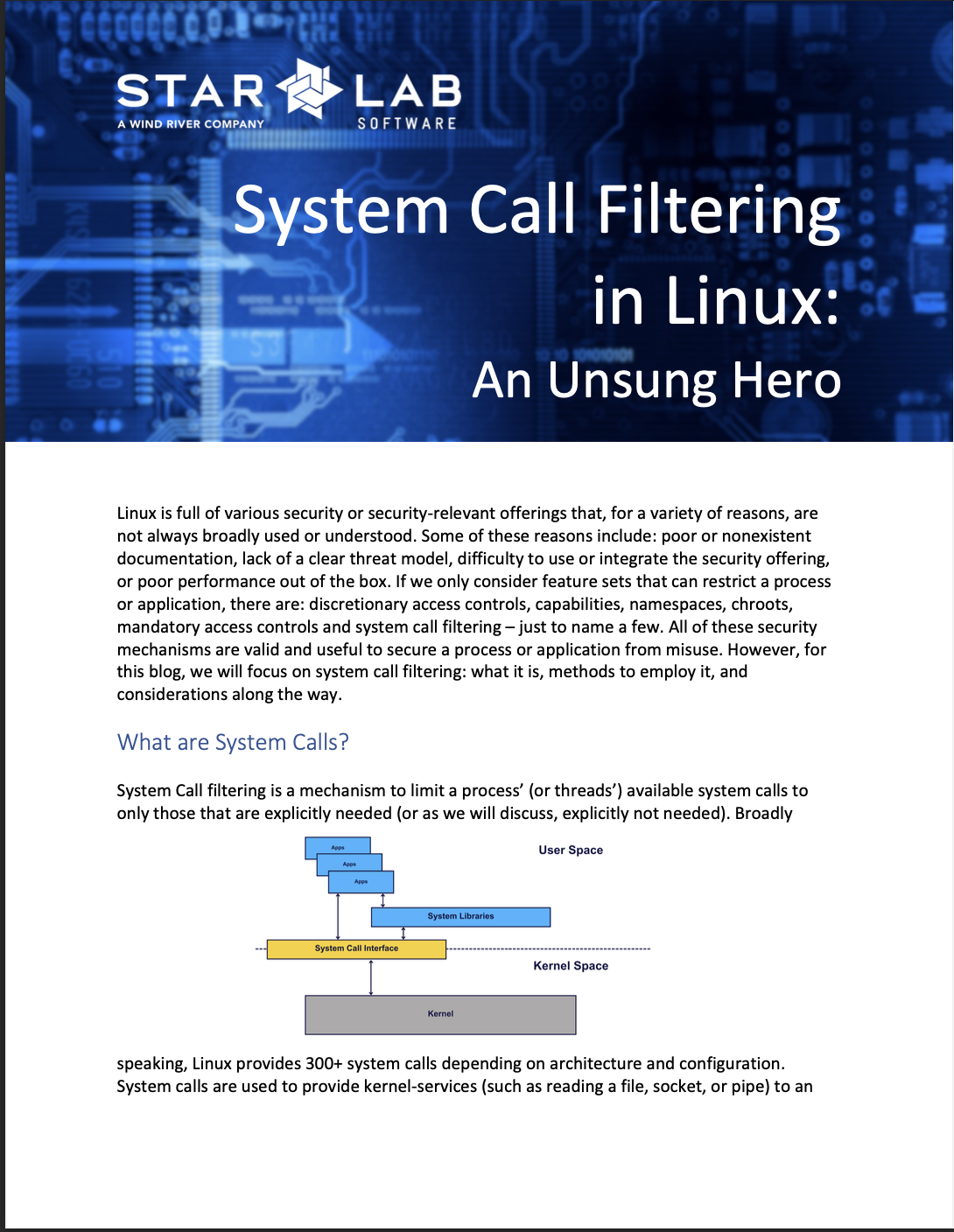

Above, it certainly didn’t look like anything fancy happened, so what is going on under the hood? Let’s start with what happens when cat reads one.txt:

We haven’t read this file before, so the kernel loads its data from disk (1). Then it loads the hash block containing the hash it needs (2). Since this is the first time the hashes have been loaded from disk, they also need to be verified. The kernel reads the next level down to find the hash of the block it just read (3). That block hasn’t been verified either, so we read another level down (4). That level is just a single block, which is verified against the root hash we gave the kernel when we opened the dm-verity device. This first disk access incurred 4 reads (one data block, three hash blocks) and 4 SHA-256 computations. This is the worst case, and it will mostly happen right after the dm-verity device is set up. (The figure shows a simplified tree with three hash levels; with the four-level tree veritysetup built in our example, a cold read costs five reads and five hashes.)

Let’s read the same file again! This time the file’s data is in the page cache, and the kernel knows it has already been verified (1), so the data is returned with zero reads and zero hashes. However, if the file hasn’t been accessed in a while, the kernel might evict that page from the page cache to free up RAM for other uses. In that case, the data will need to be re-read from disk and re-verified. (Dm-verity does provide an option, check_at_most_once, to verify each data block only the very first time it is read. This still detects offline tampering but not changes made while the device is in use, trading weaker security for slightly better performance.)

Let’s read another file, and for illustration, assume that it is physically near the one we just read. We read the file’s data from disk (1) and look up the hash for that data block (2). Luckily for us, the hash we need is in the same hash block as before, and that block is still cached in memory (dm-verity keeps hash blocks in its own buffer cache, separate from the page cache). Because it was verified when it was first loaded, we can trust it, and there’s no further work to be done. One read, one hash.

Like the file data, a hash block can be evicted from memory if the kernel needs the RAM for something else. In that case, we re-read and re-verify the blocks in all levels of the tree as before, but we can skip reading and verifying any blocks that are still cached.
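The walkthrough above can be captured in a small counting model. This is a hypothetical Python sketch, not kernel code: it assumes 128 digests per hash block, treats any cached hash block as already verified (as described above), and ignores readahead, hash-block prefetching and real eviction policy. The 20,000-block size in the demo is an arbitrary choice that happens to give the three hash levels shown in the figure.

```python
class VerityReadModel:
    """Toy model that counts disk reads and hash computations per data-block read.

    cached_data stands in for the page cache (data already verified), and
    verified_hash_blocks for dm-verity's cache of already-verified hash blocks.
    """

    def __init__(self, data_blocks: int, hashes_per_block: int = 128):
        self.per_block = hashes_per_block
        self.levels = 0
        count = data_blocks
        while count > 1:                       # one level per round of 128-way grouping
            count = -(-count // hashes_per_block)
            self.levels += 1
        self.cached_data = set()
        self.verified_hash_blocks = set()      # {(level, block_index)}

    def read(self, block: int) -> tuple[int, int]:
        """Return (disk_reads, hashes_computed) for reading one data block."""
        if block in self.cached_data:
            return 0, 0                        # served from cache, already verified
        reads = hashes = 1                     # read the data block and hash it
        pos = block
        for level in range(self.levels):
            pos //= self.per_block             # hash block holding the digest we need
            if (level, pos) in self.verified_hash_blocks:
                break                          # trusted block: the chain stops here
            reads += 1                         # read this hash block from disk...
            hashes += 1                        # ...and hash it to check it one level up
            self.verified_hash_blocks.add((level, pos))
        self.cached_data.add(block)
        return reads, hashes

    def evict_data(self, block: int) -> None:
        self.cached_data.discard(block)

    def evict_hash_block(self, level: int, index: int) -> None:
        self.verified_hash_blocks.discard((level, index))


if __name__ == "__main__":
    model = VerityReadModel(20_000)            # 3 hash levels, as in the figure
    print(model.levels)                        # 3
    print(model.read(0))                       # (4, 4): cold read, 1 data + 3 hash blocks
    print(model.read(0))                       # (0, 0): page-cache hit
    print(model.read(1))                       # (1, 1): neighbour shares the hash block
```

Evicting a level-0 hash block and then reading an uncached neighbour costs two reads and two hashes in this model: the evicted block is re-read and re-verified, and the walk stops at the still-cached block above it.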

Dealing with corruption

Because dm-verity protects the disk at the block level, even a single-bit change anywhere on the disk will cause dm-verity to raise errors when the affected block is read. In fact, merely mounting the file system read-write is enough to upset dm-verity, because ext4 writes small metadata updates to disk, such as the mount count and last-mount time in its superblock, which lives in data block 0. To demonstrate, let’s first tear down our dm-verity device from before:

```
root# umount mnt/
root# veritysetup close verity-test
```

Now we will mount the data partition read-write, but make no other changes:

```
root# mount -o loop data_partition.img mnt/
root# umount mnt/
```

We encounter errors when we attempt to open and mount the dm-verity device again:

```
root# veritysetup open \
> data_partition.img \
> verity-test \
> hash_partition.img \
> a2a8fd07889deb10b4cdf53c01637ed373212cd7d0877a8aa9ae6fd4240f0f71
Verity device detected corruption after activation
root# mount /dev/mapper/verity-test mnt/
mount: /path/to/mnt: can't read superblock on /dev/mapper/verity-test
root# dmesg
...
[412036.212897] device-mapper: verity: 7:16: data block 0 is corrupted
[412036.212996] device-mapper: verity: 7:16: data block 0 is corrupted
[412036.213009] buffer_io_error: 91 callbacks suppressed
[412036.213011] Buffer I/O error on dev dm-0, logical block 0, async page read
[412036.223697] device-mapper: verity: 7:16: data block 0 is corrupted
...
```

Why not dm-verity?

Just as with any technology, dm-verity is not suitable for every use case. The most likely incompatibility is that a dm-verity protected disk is read-only. This works quite well for an embedded device with a read-only system partition and a read-write data partition, but it’s an awkward fit for a conventional server or workstation setup. (Note that it is possible to get dm-verity working on distros such as Debian or Red Hat with the aid of a custom partitioning scheme and a bit of initramfs work.)

The second noteworthy consideration is system updates. Because a dm-verity device cannot be written to, updates have to be applied while the partition is not active under dm-verity, for example to an inactive copy of the partition or from a recovery environment. In an embedded system where you would expect identical system partitions on every device, block-based updates work well for this, allowing us to update the file system data and the hashes all at once. Since we know the exact disk layout of each firmware version, the updated root hash can be calculated ahead of time. This is the route taken by Android and other embedded Linux devices.

File-based updates, such as those performed by rpm or apt and more common in desktop and server distributions, are also problematic. They do not guarantee a consistent order of writes, timestamps, or other file metadata, so each file system ends up unique. This means that each device will need to recalculate its own root hash after every update, which can take some time for a large disk. A further complication is that the root hash needs to be protected by secure boot, so it will somehow need to be updated securely as well, possibly by re-signing various boot components while securely handling any relevant key material.

Alternatives to dm-verity

For the sake of comparison, we’ll briefly describe how dm-crypt, IMA/EVM, and fs-verity differ from dm-verity.

Dm-crypt

Full-disk encryption with dm-crypt (assuming an AEAD cipher or HMAC-based authentication, which dm-crypt implements together with dm-integrity) can provide largely the same offline protection as dm-verity. Additionally, it provides confidentiality, so that an attacker cannot read sensitive information. There are, however, a few drawbacks.

The first drawback is that the encryption key needs to be kept a secret. This will require a TPM, a user password entered at each boot, or some other method of securely providing or storing the key material. This is not suitable for every use case: small embedded devices or short-lived cloud images come to mind. By contrast, the dm-verity root hash needs to be protected from tampering, but it does not need to be a secret, providing much more flexibility.

The second drawback is performance. Dm-verity usually computes just one hash per block read (a few more when hash blocks are not yet cached), which is typically cheaper than decrypting and authenticating every block. Even though dm-verity occasionally requires extra disk reads, it should still come out ahead over the course of normal operation.

Dm-crypt also allows the partition to be modified at runtime. While this is often desirable, it gives an attacker an avenue for persistently modifying code or configuration: an attacker with root privileges can easily add software or rootkits for later access.

The final drawback is that dm-crypt is not very suitable for protecting a fleet of identical devices, since devices should never share encryption keys. So embedded devices rolling off the assembly line or an image intended for use in multiple virtual machines would need some mechanism for re-encrypting prior to deployment.

IMA/EVM

The Linux kernel’s Integrity Measurement Architecture (IMA) has seen significant development in recent years. Like dm-verity, IMA checks data against expected hashes, though it works on individual files rather than disk blocks. IMA is a powerful but complicated system with many moving parts, and its overarching policy is often left as an exercise for the reader; in fact, many guides for setting up IMA warn of various kinds of unexpected behavior. IMA also does not necessarily protect every file on a partition unless configured to do so. Dm-verity, by contrast, is significantly simpler to reason about.

IMA alone does not provide offline integrity. It stores its reference hashes on disk as extended attributes (security.ima), which an attacker with access to the powered-off disk can modify. To provide offline integrity, you would turn to the Extended Verification Module (EVM), which stores HMACs of the integrity data, keyed with a secret. This secret key needs to be unique per device and protected the same way as a dm-crypt encryption key, so this is also not practical for many use cases.
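To make the distinction concrete, here is a conceptual Python sketch of why an unkeyed hash alone gives no offline integrity while a keyed HMAC does. It deliberately simplifies: real IMA keeps a file hash in the security.ima extended attribute, and real EVM computes its HMAC over security extended attributes and inode metadata in a kernel-defined format. Here a label covers only the file contents, and all function names and the key are hypothetical.

```python
import hashlib
import hmac

# Conceptual model only, not the real IMA/EVM on-disk format.


def ima_style_label(content: bytes) -> bytes:
    """Unkeyed reference hash: anyone who can edit the disk can recompute it."""
    return hashlib.sha256(content).digest()


def evm_style_label(content: bytes, key: bytes) -> bytes:
    """Keyed tag over the reference hash: producing it requires the secret key."""
    return hmac.new(key, ima_style_label(content), hashlib.sha256).digest()


def appraise_unkeyed(content: bytes, label: bytes) -> bool:
    return hmac.compare_digest(ima_style_label(content), label)


def appraise_keyed(content: bytes, label: bytes, key: bytes) -> bool:
    return hmac.compare_digest(evm_style_label(content, key), label)


if __name__ == "__main__":
    device_key = b"per-device secret"          # hypothetical key material
    tampered = b"#!/bin/sh\necho owned\n"
    # An offline attacker edits the file and simply recomputes the unkeyed label:
    print(appraise_unkeyed(tampered, ima_style_label(tampered)))      # True
    # Without device_key, the attacker can only guess at the keyed tag:
    forged = evm_style_label(tampered, b"attacker guess")
    print(appraise_keyed(tampered, forged, device_key))               # False
```

The sketch also shows the practical catch: the protection is only as good as the secrecy of `device_key`, which is why it must be unique per device and stored as carefully as a dm-crypt key.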

Fs-verity

Fs-verity is very much like dm-verity, but per-file rather than per-disk. It requires special file system support and is meant to work in conjunction with (from the kernel docs) “trusted userspace code (e.g., operating system code running on a read-only partition that is itself authenticated by dm-verity).” Fs-verity is also limited to verifying regular file contents. It cannot protect metadata or directories. It is geared towards protecting a few large files such as Android APKs.

Hopefully this introduction to dm-verity provides a foundation for further research and learning about file system integrity solutions for Linux-based systems. File system integrity is an increasingly critical security measure as the software and data used in edge devices grow.

Further Reading

To read on about these topics, we recommend the following: